ORIGINAL RESEARCH ARTICLE

A novel model of artificial intelligence based automated image analysis of CT urography to identify bladder cancer in patients investigated for macroscopic hematuria

Suleiman Abuhasanein (a,b), Lars Edenbrandt (c,d), Olof Enqvist (e,f), Staffan Jahnson (g), Henrik Leonhardt (g,i), Elin Trägårdh (j,k), Johannes Ulén (f), Henrik Kjölhede (a,l)

(a) Department of Urology, Institute of Clinical Science, Sahlgrenska Academy, University of Gothenburg, Göteborg, Sweden; (b) Department of Surgery, Urology section, NU Hospital Group, Uddevalla, Region Västra Götaland, Sweden; (c) Department of Clinical Physiology, Sahlgrenska University Hospital, Göteborg, Sweden; (d) Department of Molecular and Clinical Medicine, Institute of Medicine, Sahlgrenska Academy, University of Gothenburg, Göteborg, Sweden; (e) Department of Electrical Engineering, Chalmers University of Technology, Göteborg, Sweden; (f) Eigenvision AB, Malmö, Sweden; (g) Department of Clinical and Experimental Medicine, Division of Urology, Linköping University, Linköping, Sweden; (h) Department of Radiology, Institute of Clinical Science, Sahlgrenska Academy, University of Gothenburg, Göteborg, Sweden; (i) Department of Radiology, Sahlgrenska University Hospital, Region Västra Götaland, Göteborg, Sweden; (j) Department of Clinical Physiology and Nuclear Medicine, Lund University and Skåne University Hospital, Malmö, Sweden; (k) Wallenberg Centre for Molecular Medicine, Lund University, Lund, Sweden; (l) Department of Urology, Sahlgrenska University Hospital, Region Västra Götaland, Göteborg, Sweden

ABSTRACT

Objective: To evaluate whether artificial intelligence (AI) based automatic image analysis utilising convolutional neural networks (CNNs) can be used to evaluate computed tomography urography (CTU) for the presence of urinary bladder cancer (UBC) in patients with macroscopic hematuria.

Methods: Our study included patients who had undergone evaluation for macroscopic hematuria. A CNN-based AI model was trained and validated on the CTUs included in the study on a dedicated research platform (Recomia.org). Sensitivity and specificity were calculated to assess the performance of the AI model. Cystoscopy findings were used as the reference method.

Results: The training cohort comprised a total of 530 patients. Following the optimisation process, we developed the final version of our AI model, which was then applied to the validation cohort of an additional 400 patients (including 239 patients with UBC). The AI model had a sensitivity of 0.83 (95% confidence interval [CI] 0.76–0.89), a specificity of 0.76 (95% CI 0.67–0.84), and a negative predictive value (NPV) of 0.97 (95% CI 0.95–0.98). Most tumours in the false negative group (n = 24) were solitary (67%), half measured 1 cm or less (50%), and most patients had cTaG1–2 tumours (71%).

Conclusions: We developed and tested an AI model for automatic image analysis of CTUs to detect UBC in patients with macroscopic hematuria. The model showed promising results, with a high detection rate and a high NPV. Further development could reduce the need for invasive investigations and help prioritise patients with serious tumours.

KEYWORDS: Artificial intelligence; bladder cancer; computed tomography; convolutional neural networks; deep learning; hematuria

Citation: Scandinavian Journal of Urology 2024, VOL. 59, 90–97. https://doi.org/10.2340/sju.v59.39930.

Copyright: © 2024 The Author(s). Published by MJS Publishing on behalf of Acta Chirurgica Scandinavica. This is an Open Access article distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), allowing third parties to copy and redistribute the material in any medium or format and to remix, transform, and build upon the material, with the condition of proper attribution to the original work.

Received: 21 January 2024; Accepted: 17 April 2024; Published: 2 May 2024

CONTACT Suleiman Abuhasanein suleiman.abuhasanein@gu.se Department of Urology, Institute of Clinical Science, Sahlgrenska Academy, University of Gothenburg, SE-413 90 Gothenburg, Sweden

Competing interests and funding: The authors have no conflict of interest to declare.

The study was supported by grants from the Swedish state under the agreement between the Swedish government and the county councils, the ALF-agreement (ALFGBG-873181), and by grants from Department of Research and Development, NU-Hospital Group.

Introduction

Urinary bladder cancer (UBC) is the 10th most commonly diagnosed cancer worldwide [1] and the second most common cause of death from urological cancer [2]. The most common sign of UBC is macroscopic hematuria, which is investigated by cystoscopy and computed tomography urography (CTU) [3]. The European Society of Urogenital Radiology (ESUR) describes CTU as a comprehensive multiphasic imaging technique for the entire urinary tract that uses intravenous injection of contrast medium [4].

Computed tomography urography is an accurate, non-invasive test for detecting UBC in patients at risk of the disease, owing to its ability to detect early contrast medium enhancement [5]. Likewise, it has been suggested that CTU including an early corticomedullary phase (CMP) could offer an alternative comparable to cystoscopy for detecting UBC [6]. However, interpreting CTU requires expertise and can be time-consuming, particularly when hundreds of CTU slices must be reviewed. Moreover, the possibility of overlooking significant pathology cannot be discounted [7].

Recently, artificial intelligence (AI) has gained momentum not only in the diagnosis of urological malignancies but also in their treatment and in predictive analytics [8]. AI is an emerging field of computer science that aims to replicate cognitive processes resembling human learning, logic, and problem solving [9]. Convolutional neural networks (CNNs) are a type of AI model, inspired by the structural organisation of biological neural networks, that uses multiple layers of interconnected artificial neurons to learn complex features with spatial correlations, such as those in CTU images [10]. The objective of this study was therefore to develop and evaluate a CNN-based automatic image analysis for interpreting CTU scans to detect or rule out UBC in patients presenting with macroscopic hematuria.
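To illustrate what learning features "with spatial correlations" means at the level of a single layer, the sketch below applies one convolutional filter to a toy 2D image with NumPy. The image and the 3×3 averaging kernel are made up for illustration only: in a CNN the kernel weights are learned from data, many filters are stacked in layers, and the 3D U-Net used in this study operates on volumes rather than on single slices.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' (unpadded) 2D cross-correlation, the operation most deep learning
    frameworks implement as a convolution layer."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Each output value is a weighted sum over a small neighbourhood only,
            # so the filter responds to local spatial structure.
            out[i, j] = float(np.sum(image[i:i + kh, j:j + kw] * kernel))
    return out


# Toy slice: dark background with one small bright blob (e.g. an enhancing lesion).
image = np.zeros((9, 9))
image[3:6, 3:6] = 1.0

# Illustrative 3x3 averaging kernel (hand-set here; learned in a real CNN).
kernel = np.full((3, 3), 1.0 / 9.0)

response = conv2d_valid(image, kernel)
# Output index (i, j) corresponds to the image window whose top-left pixel is (i, j).
peak = np.unravel_index(np.argmax(response), response.shape)

if __name__ == "__main__":
    print(response.round(2))
    print("peak response at output index", tuple(int(v) for v in peak))
```

The response is largest where the whole kernel overlaps the blob, at output index (3, 3), which corresponds to the window centred on image pixel (4, 4).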

Materials and methods

The study is a retrospective observational case-control study, reported according to CLAIM (Checklist for AI in Medical Imaging) [11].

Study patients

All patients diagnosed with histopathologically verified UBC in the NU Hospital Group, Uddevalla, Sweden, between 1st November 2016 and 31st December 2019, and a random sample of patients who had macroscopic hematuria in the same period but without detected UBC were included as the training cohort. The validation cohort was formed in the same way, namely by including all patients with UBC and randomly selected patients who had undergone evaluations for macroscopic hematuria, but without detected UBC, within the same institution during the period 1st January 2020 to 31st December 2021.

Patients with a prior history of UBC or upper tract urothelial carcinoma (UTUC) were excluded, as were patients whose CTUs lacked contrast medium administration, the unenhanced phase (UP), or the CMP. CTU images with significant artifacts (e.g. from hip prostheses) were also excluded. Failure of segmentation due to patient movement or mismatch of CTU series was another exclusion criterion. However, patients with suboptimal bladder filling were included.

The number of tumours and the size of the largest tumour (estimated during transurethral resection of tumour in the bladder [TURBT], using the 7 mm loop of the resectoscope as a reference) were recorded for all patients with UBC. Clinical stage according to the tumour, node, metastasis (TNM) classification, 8th edition [12], and tumour grade according to the World Health Organization (WHO) 1999 classification [13] were recorded. The study was approved by the Swedish Ethical Review Authority (reference number 2022-05590-02).
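The size bands reported in Tables 1 and 3 (≤10 mm, 11–30 mm, ≥31 mm) can be applied as in the sketch below. The helper that converts a visual estimate expressed in loop widths to millimetres is hypothetical and only illustrates using the 7 mm loop as a scale reference; assigning values that fall between the printed bands (e.g. 10.5 mm) to the next band up is likewise an assumption.

```python
def estimate_size_from_loop(loop_widths: float, loop_mm: float = 7.0) -> float:
    """Hypothetical helper: tumour size as a multiple of the resectoscope loop width (7 mm)."""
    if loop_widths <= 0:
        raise ValueError("loop_widths must be positive")
    return loop_widths * loop_mm


def size_category(size_mm: float) -> str:
    """Assign the largest tumour diameter to the size bands used in Tables 1 and 3.

    Assumption: values between the printed bands (e.g. 10.5 mm) go to the next band up.
    """
    if size_mm <= 0:
        raise ValueError("tumour size must be positive")
    if size_mm <= 10:
        return "≤ 10 mm"
    if size_mm <= 30:
        return "11–30 mm"
    return "≥ 31 mm"


if __name__ == "__main__":
    for widths in (1, 3, 5):
        size = estimate_size_from_loop(widths)
        print(f"{widths} loop width(s) = {size:.0f} mm -> {size_category(size)}")
```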

Imaging technology

Before the examination, patients were instructed to drink 1,000 mL of water and to refrain from urinating for approximately 90 min before the CTU. The CTU was performed with the patient in the supine position on a 64-detector scanner (General Electric, Boston, USA, or Siemens Medical Solutions, Forchheim, Germany). A four-phase CTU protocol was used, starting with the UP, followed by the CMP at bolus tracking + 20 s after intravenous administration of iodinated contrast medium (Iohexol 350 mg/mL; Omnipaque; GE Healthcare, Waukesha, WI, USA). Contrast medium volume and injection rate were calculated with the OmniVis 5.1 programme (GE Healthcare) on the basis of age, gender, height, weight, and creatinine level, with a maximum of 123 mL of contrast medium injected. For instance, a 60-year-old male patient with a height of 185 cm, a weight of 100 kg, and a creatinine level of 75 µmol/L would require 123 mL of contrast medium at an injection rate of 4.9 mL/s.

This was followed by the nephrographic phase (NP) at bolus tracking + 40 s. After brief mobilisation of the patient, an excretory phase (EP) was obtained more than 7.5 min after contrast medium administration. For all four phases, the mAs was reduced by 40% on GE scanners, whereas a quality reference mAs setting was used on Siemens scanners. A collimation of 0.6 mm, a pitch of 1.4, and a tube voltage of 120 kVp were applied in all phases. No diuretic drugs were given, and not all patients had a CTU with a nephrographic phase. The same protocol was used in both the training and validation cohorts.
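The phase timings above can be collected into a small configuration structure, which also makes the worked contrast example easy to check: 123 mL delivered at a constant 4.9 mL/s takes roughly 25 s. This is a minimal sketch; the class and field names are hypothetical, it is not a scanner or OmniVis format, and the OmniVis dose calculation itself is not reproduced. The flag marking UP and CMP as model inputs reflects the image analysis described in the next section.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Phase:
    name: str
    trigger_delay_s: Optional[float]       # delay after the bolus-tracking trigger, if triggered
    min_post_injection_s: Optional[float]  # minimum delay after contrast administration, if stated
    used_by_ai_model: bool                 # only UP and CMP were fed to the CNN


# Illustrative summary of the four-phase protocol in the text (hypothetical structure).
CTU_PROTOCOL = (
    Phase("unenhanced (UP)", None, None, True),
    Phase("corticomedullary (CMP)", 20.0, None, True),
    Phase("nephrographic (NP)", 40.0, None, False),
    Phase("excretory (EP)", None, 7.5 * 60, False),
)

# Acquisition settings stated as common to all phases.
COMMON_SETTINGS = {"collimation_mm": 0.6, "pitch": 1.4, "tube_voltage_kvp": 120}


def injection_duration_s(volume_ml: float, rate_ml_per_s: float) -> float:
    """Time needed to deliver the bolus, assuming a constant injection rate."""
    if rate_ml_per_s <= 0:
        raise ValueError("injection rate must be positive")
    return volume_ml / rate_ml_per_s


if __name__ == "__main__":
    # Worked example from the text: 123 mL at 4.9 mL/s.
    print(f"injection time ~ {injection_duration_s(123, 4.9):.1f} s")
    print([p.name for p in CTU_PROTOCOL if p.used_by_ai_model])
```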

Image processing and AI based automatic image analysis

All included CTUs were anonymised and copied to a separate study database on a cloud-based annotation platform (https://www.recomia.org) [14]. After exclusion of non-eligible CTUs, a urologist (SA) manually labelled all detectable UBC lesions on all axial reconstructions of the UP and CMP series in the training cohort. Labelling followed the cystoscopic findings, which were corroborated by cancer findings in the pathology reports. Manual labelling was restricted to axial images, because the model is voxel-based and the labels therefore apply automatically to all reconstructed projections. No manual labelling was done for CTUs in the validation cohort, since cystoscopy findings were used as the reference method. The excretory phase was not used because of the additional complexity introduced by the changed anatomy after mobilisation of the patient.
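Because labels are attached to voxels rather than to a particular 2D view, a mask drawn slice by slice in the axial plane is automatically present in coronal and sagittal reformats. A minimal NumPy sketch, assuming the volume and mask are stored as arrays indexed (z, y, x); voxel spacing is ignored because it affects only the display aspect ratio, not which voxels are labelled. This is an illustration, not the Recomia implementation.

```python
import numpy as np

# Assumption: volumes and masks are indexed (z, y, x) =
# (axial slice, anterior-posterior row, left-right column).
depth, height, width = 6, 8, 8
label_mask = np.zeros((depth, height, width), dtype=np.uint8)

# "Manual" labelling done only in the axial plane:
# a small lesion drawn on axial slices 2 and 3 (0-based), rows 3-4, columns 4-5.
for z in (2, 3):
    label_mask[z, 3:5, 4:6] = 1


def coronal_slice(volume: np.ndarray, y: int) -> np.ndarray:
    """Coronal reformat at row y, returned as a (z, x) image."""
    return volume[:, y, :]


def sagittal_slice(volume: np.ndarray, x: int) -> np.ndarray:
    """Sagittal reformat at column x, returned as a (z, y) image."""
    return volume[:, :, x]


# The same labelled voxels appear in the other planes without any extra labelling.
cor = coronal_slice(label_mask, 3)
sag = sagittal_slice(label_mask, 4)

if __name__ == "__main__":
    print("coronal view:\n", cor)
    print("sagittal view:\n", sag)
```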

Initially, the model was trained on a subset of the training cohort. An internal validity test was then performed on the remaining part of the training cohort, resulting in the final AI model, which was tested on the validation cohort as described below. A CNN based on the 3D U-Net architecture [15] was trained to classify each voxel as either background or UBC. The input to the network had three channels: the UP image, the CMP image, and an automatic segmentation of the urinary bladder. The automatic segmentation was performed with an off-the-shelf model trained on a different cohort [16].
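The three-channel input described above can be assembled as in the following sketch, which assumes that the UP image, the CMP image, and the bladder segmentation have already been resampled to the same voxel grid and that the network expects a channel-first (channel, z, y, x) array. The U-Net itself is not shown; the second function only illustrates how a two-class, per-voxel output (background versus UBC) is turned into a label map. Function names are hypothetical.

```python
import numpy as np


def build_network_input(up_hu: np.ndarray, cmp_hu: np.ndarray, bladder_mask: np.ndarray) -> np.ndarray:
    """Stack UP, CMP and the bladder segmentation into a (3, z, y, x) float32 array."""
    if not (up_hu.shape == cmp_hu.shape == bladder_mask.shape):
        raise ValueError("all inputs must be co-registered volumes of the same shape")
    return np.stack(
        [up_hu.astype(np.float32), cmp_hu.astype(np.float32), bladder_mask.astype(np.float32)],
        axis=0,
    )


def voxel_labels(logits: np.ndarray) -> np.ndarray:
    """Per-voxel class from a (2, z, y, x) output: 0 = background, 1 = UBC."""
    return np.argmax(logits, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    up = rng.normal(0.0, 50.0, size=(16, 32, 32))       # toy HU values
    cmp_img = rng.normal(50.0, 50.0, size=(16, 32, 32))
    mask = np.zeros((16, 32, 32), dtype=np.uint8)
    x = build_network_input(up, cmp_img, mask)
    print(x.shape, x.dtype)
```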

The model was trained using categorical cross-entropy loss and optimised using Adam with Nesterov momentum, with an initial learning rate of 0.0001 reduced by 2.5% each epoch. Each epoch consisted of 20,000 patches randomly selected from the data, such that the centre point of the patch lay in UBC 50% of the time and in background 50% of the time. To improve generalisation, patches were augmented with random intensity offsets of −100 to +100 Hounsfield units (HU) for the CTU images, random scaling of −10% to +10% for all images, and random rotations of −8.5 to +8.5 degrees for all images. After 50 epochs, the model was run on all training images and the sampling was updated so that high-loss voxels were sampled more often. The model was then trained for another 50 epochs. Following training and final acceptance of the model, the CTUs in the validation cohort were analysed and classified as either showing UBC (positive) or not (negative).
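The learning-rate schedule, balanced patch sampling, and augmentation ranges above can be sketched in plain Python. Assumptions, all labelled in the code: the 2.5% reduction is read as multiplicative per epoch (so after 50 epochs of decay the rate is about 28% of its initial value); voxels are represented as coordinate tuples; and the loss-weighted sampler for the second 50 epochs is hypothetical, since the exact update rule is not described. The network, the loss, and the optimiser are not shown; Adam with Nesterov momentum is commonly known as Nadam and is available in mainstream frameworks, for example as `torch.optim.NAdam` in PyTorch.

```python
import random

INITIAL_LR = 1e-4
LR_DECAY_PER_EPOCH = 0.025   # "reduced by 2.5% each epoch", read as multiplicative (assumption)
PATCHES_PER_EPOCH = 20_000
P_UBC_CENTRE = 0.5           # half of the patch centres lie in UBC, half in background


def learning_rate(epoch: int) -> float:
    """Learning rate for a 0-based epoch index under multiplicative decay."""
    return INITIAL_LR * (1.0 - LR_DECAY_PER_EPOCH) ** epoch


def sample_patch_centres(ubc_voxels, background_voxels, n, rng):
    """Draw n patch centres; each is a UBC voxel with probability P_UBC_CENTRE."""
    centres = []
    for _ in range(n):
        pool = ubc_voxels if rng.random() < P_UBC_CENTRE else background_voxels
        centres.append(rng.choice(pool))
    return centres


def sample_augmentation(rng):
    """Random augmentation parameters drawn uniformly from the ranges in the text."""
    return {
        "hu_offset": rng.uniform(-100.0, 100.0),  # added to the CTU (UP/CMP) channels only
        "scale": rng.uniform(0.9, 1.1),           # +/-10 %, applied to all channels
        "rotation_deg": rng.uniform(-8.5, 8.5),   # +/-8.5 degrees, applied to all channels
    }


def loss_weighted_centres(voxels, losses, n, rng):
    """Hypothetical second-stage sampler: centres drawn with probability proportional to loss."""
    return rng.choices(voxels, weights=losses, k=n)


if __name__ == "__main__":
    rng = random.Random(0)
    for epoch in (0, 49, 50, 99):
        print(epoch, f"{learning_rate(epoch):.2e}")
    print(sample_augmentation(rng))
```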

Statistical analysis

Descriptive statistics were used for patient characteristics (age at CTU and sex) and tumour characteristics (clinical tumour stage, tumour size, and number of tumours). Continuous data are presented as median and interquartile range (IQR). Sensitivity and specificity were calculated on a patient-level basis with 95% confidence intervals (CI). Positive predictive value (PPV) and NPV were calculated with 95% CI based on the prevalence of UBC in the entire validation cohort during the study period, since the case-control sampling of the analysed patients does not reflect the true prevalence. Statistical analysis was performed using the Statistical Package for the Social Sciences (SPSS) version 29 (IBM Corp., Armonk, NY, USA).
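The prevalence-based PPV and NPV follow from Bayes' theorem, using sensitivity, specificity, and an externally supplied prevalence instead of the proportions in the analysed 2×2 table. The sketch below assumes a prevalence of 239/1,735 (the patients with UBC among all patients investigated in the validation period, shown as 0.14 in Table 2) and uses the Wilson score interval for sensitivity; the interval method is a swapped-in choice, since the text does not state which method was used, and confidence intervals for the adjusted PPV and NPV are not reproduced.

```python
import math


def wilson_ci(successes: int, n: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a binomial proportion (95% for z = 1.96)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need 0 <= successes <= n and n > 0")
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def prevalence_adjusted_ppv_npv(sens: float, spec: float, prevalence: float) -> tuple:
    """PPV and NPV from sensitivity, specificity and an external prevalence (Bayes' theorem)."""
    ppv = sens * prevalence / (sens * prevalence + (1 - spec) * (1 - prevalence))
    npv = spec * (1 - prevalence) / (spec * (1 - prevalence) + (1 - sens) * prevalence)
    return ppv, npv


# Validation-cohort counts for all patients (Table 2).
tp, tn, fp, fn = 118, 81, 25, 24
sensitivity = tp / (tp + fn)
specificity = tn / (tn + fp)
prevalence = 239 / 1735  # UBC among all patients investigated in the validation period
ppv, npv = prevalence_adjusted_ppv_npv(sensitivity, specificity, prevalence)

if __name__ == "__main__":
    lo, hi = wilson_ci(tp, tp + fn)
    print(f"sensitivity {sensitivity:.2f} ({lo:.2f}-{hi:.2f})")
    print(f"specificity {specificity:.2f}")
    print(f"PPV {ppv:.2f}, NPV {npv:.2f}")
```

With these inputs the sketch gives a sensitivity of 0.83 (Wilson interval 0.76–0.88), a specificity of 0.76, an NPV of 0.97, and a PPV of 0.36; the last differs slightly from the reported 0.37, which may reflect rounding or the exact prevalence value used.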

Results

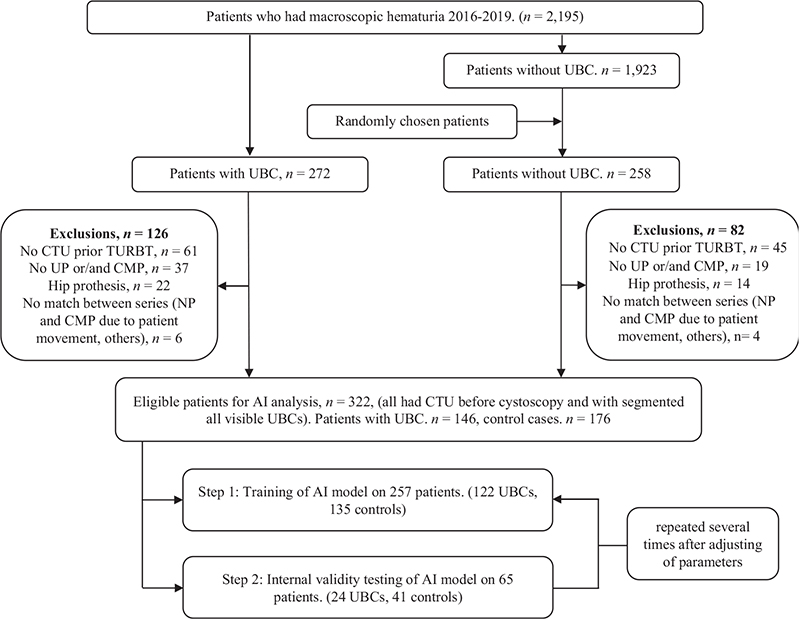

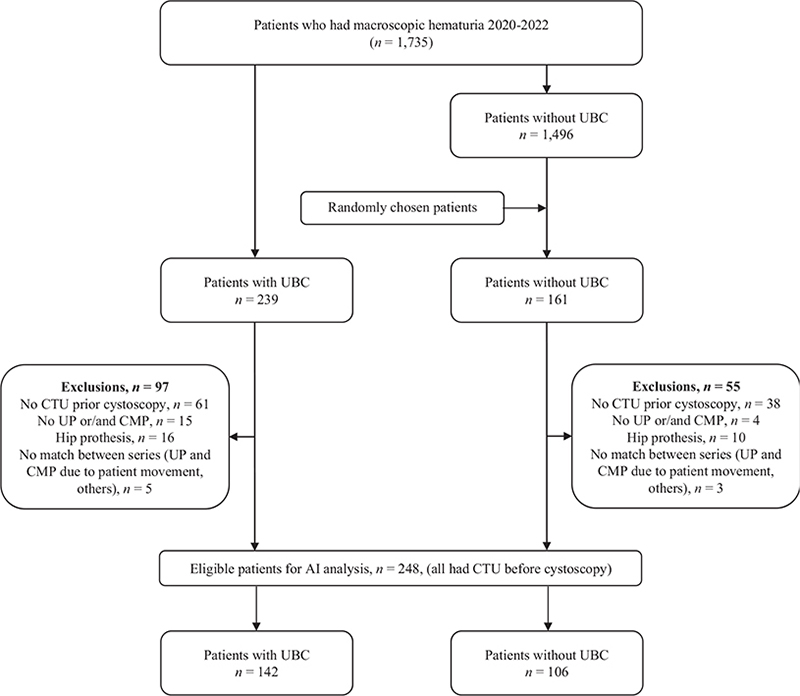

All 2,195 patients evaluated for macroscopic hematuria from 1st November 2016 to 31st December 2019 were enrolled in the study as the training cohort. The CTUs of all 272 patients diagnosed with UBC were selected for training, as were those of 258 randomly selected patients without UBC, for a total of 530 (Figure 1A). After exclusions, 257 CTUs were used for training the AI model and 65 CTUs for internal validity testing. The final AI model was evaluated in the validation cohort, which consisted of all 1,735 patients evaluated for macroscopic hematuria from 1st January 2020 to 31st December 2021. The 239 patients with UBC in this period and 161 randomly selected patients who tested negative for UBC were used for analysis (Figure 1B). After exclusions, validation was performed on a UBC group of 142 patients (57%) and a control group of 106 patients (43%).

Figure 1A. Flow diagram showing inclusion of patients in the training cohort.

AI: artificial intelligence; CMP: corticomedullary phase; CTU: computed tomography urography; UBC: urinary bladder cancer; UP: unenhanced phase.

Figure 1B. Flow diagram showing inclusion of patients in the validation cohort.

AI: artificial intelligence; CMP: corticomedullary phase; CTU: computed tomography urography; UBC: urinary bladder cancer; UP: unenhanced phase.

Within the UBC group, a greater proportion of patients were male (123 patients, 87%) than in the control group (66 patients, 62%). The patients in the UBC group were also older, with a median age of 75 years (IQR 68–80) compared with 71 years (IQR 64–77) in the control group. In the UBC group, 84% of the pathologically verified tumours were detected on CTU according to the original CTU reports. Our AI model detected UBC in nine cases in which the initial CTU report did not. Forty-five per cent of tumours in the UBC group were solitary, and 73% were 3 cm or smaller. Furthermore, 78 patients (55%) had cTaG1–2 (low-grade) UBC, and the remaining 64 patients (45%) had high-grade UBC (cTaG3, cTis, and cT1+) (Table 1).

Table 1. Characteristics of patients with and without UBC in the validation cohort.

| Variable | Category | Patients with UBC | Patients without UBC |
|---|---|---|---|
| No. patients | n (% of the row) | 142 (57) | 106 (43) |
| Gender | Male | 123 (87) | 66 (62) |
| Age | Median (IQR), years | 75 (68–80) | 71 (64–77) |
| Age | ≥ 74 years | 80 (56) | 41 (39) |
| Radiologist's interpretation of CTU* | Visible tumour | 119 (84) | |
| Number of tumours** | Solitary | 80 (56) | |
| Size of tumour** | ≤ 10 mm | 24 (17) | |
| | 11–30 mm | 80 (56) | |
| | ≥ 31 mm | 38 (27) | |
| Tumour stage and grade*** | cTaG1–2 | 78 (55) | |
| | cTaG3, Tis, T1 | 38 (27) | |
| | cT2+ | 26 (18) | |
| TNM | N+ | 5 (3.5) | |
| | M1 | 3 (2) | |

Figures represent number of patients (% of the column) unless otherwise indicated. AI: artificial intelligence; CTU: computed tomography urography; IQR: interquartile range; TNM: tumour, node, metastasis; TURBT: transurethral resection of tumour in bladder.

*In the initial radiological report of CTU.

**Based on reports after transurethral resection of tumour in bladder (TURBT).

***Based on reports after TURBT and second-look resection if indicated.

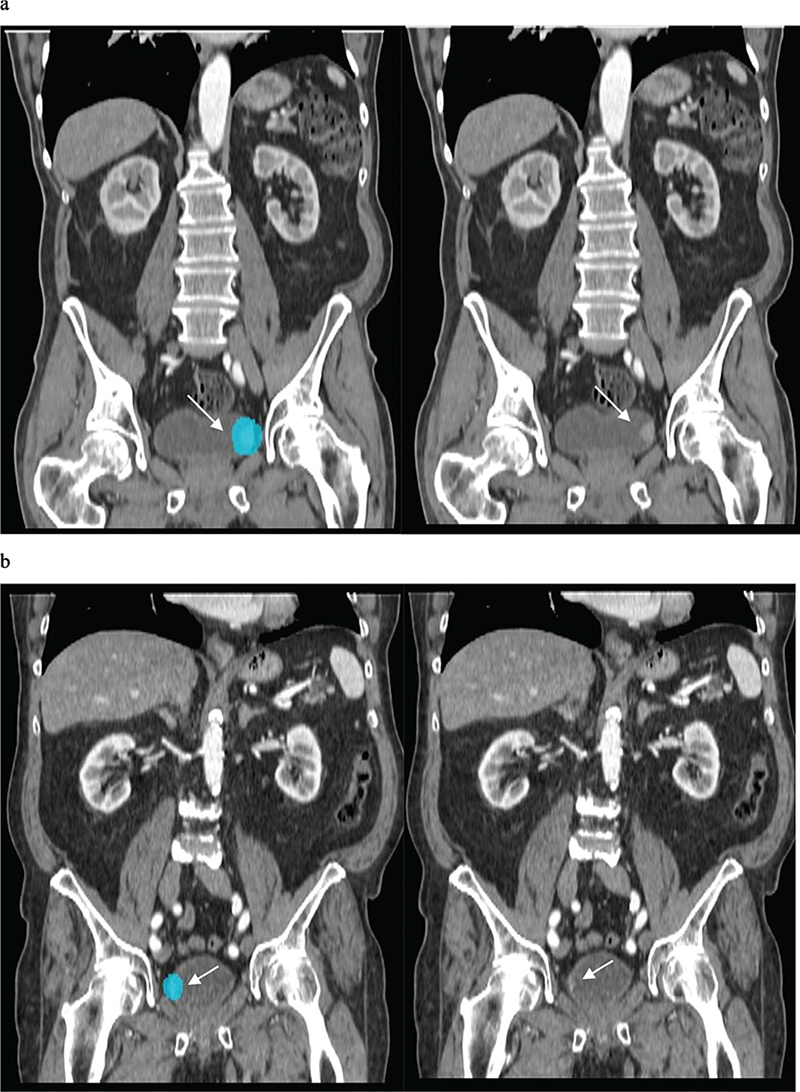

The AI-based automatic image analysis correctly classified 118 of 142 patients with UBC (Figure 2) and 81 of 106 patients in the control group, giving a sensitivity of 0.83 (95% CI 0.76–0.89) and a specificity of 0.76 (95% CI 0.67–0.84). With 24 false negative and 25 false positive cases, the PPV and NPV were 0.37 (95% CI 0.29–0.45) and 0.97 (95% CI 0.95–0.98), respectively. In the subgroups of female patients, patients younger than 70 years, and patients with high-grade UBC, sensitivity ranged from 0.84 to 0.89 and NPV from 0.98 to 0.99 (Table 2).

Table 2. Diagnostic performance of the AI model in the validation cohort, overall and by subgroup (95% CI in parentheses).

| Variable | TP | TN | FP | FN | Prev. | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|---|---|---|---|
| All patients | 118 | 81 | 25 | 24 | 0.14 | 0.83 (0.76–0.89) | 0.76 (0.67–0.84) | 0.37 (0.29–0.45) | 0.97 (0.95–0.98) |
| Gender | | | | | | | | | |
| Male | 102 | 46 | 20 | 21 | 0.18 | 0.83 (0.75–0.89) | 0.70 (0.57–0.80) | 0.44 (0.35–0.53) | 0.94 (0.91–0.96) |
| Female | 16 | 35 | 5 | 3 | 0.06 | 0.84 (0.60–0.97) | 0.88 (0.73–0.96) | 0.30 (0.17–0.50) | 0.99 (0.97–1.00) |
| Age | | | | | | | | | |
| <70 years | 33 | 37 | 10 | 6 | 0.08 | 0.85 (0.70–0.94) | 0.79 (0.64–0.89) | 0.26 (0.16–0.38) | 0.98 (0.96–0.99) |
| ≥70 years | 85 | 44 | 15 | 18 | 0.17 | 0.83 (0.74–0.89) | 0.76 (0.62–0.85) | 0.40 (0.30–0.51) | 0.95 (0.69–0.82) |
| Malignancy | | | | | | | | | |
| Low grade* | 61 | 81 | 25 | 17 | 0.08 | 0.78 (0.64–0.87) | 0.76 (0.67–0.84) | 0.22 (0.17–0.29) | 0.98 (0.96–0.98) |
| High grade** | 57 | 81 | 25 | 7 | 0.06 | 0.89 (0.79–0.96) | 0.76 (0.67–0.84) | 0.19 (0.15–0.26) | 0.99 (0.98–1.00) |

AI: artificial intelligence; PPV: positive predictive value; NPV: negative predictive value; CI: confidence interval; FN: false negative; FP: false positive; Prev.: prevalence; TN: true negative; TP: true positive.

*Low-grade UBCs are those with cTaG1–2.

**High-grade UBCs are those with cTaG3, cTis, and cT1+.

Figure 2. Two different tumours (in patients in the validation cohort) correctly identified by the AI-based automatic image analysis model, shown in coronal reconstructions of the corticomedullary phase of CTU. AI: artificial intelligence; CTU: computed tomography urography.

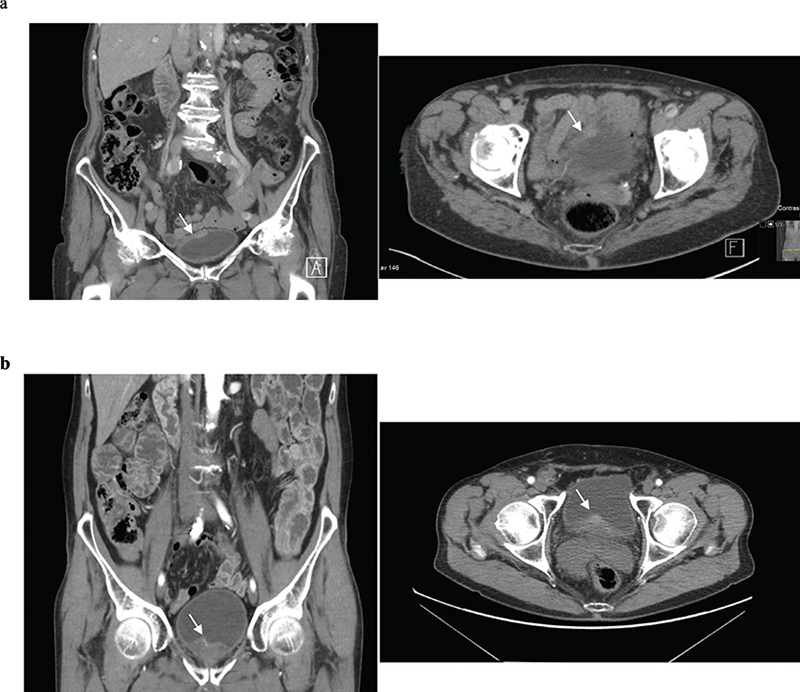

Most tumours in the false negative group (n = 24) were solitary (67%), and half measured 1 cm or less (50%). Most patients had cTaG1–2 tumours (71%), while two patients (8%) had cT2+ tumours (Table 3). Of these two cases, the first, an 82-year-old female, had a flat 2 cm tumour located apically within a poorly distended urinary bladder; this tumour had extensive squamous cell differentiation. The second, an 80-year-old male with prostatic hyperplasia, had a 1 cm tumour in the bladder neck, which also had squamous cell differentiation (Figure 3).

Table 3. Characteristics of the false negative cases (n = 24).

| Variable | Category | Patients with UBC (n = 24) |
|---|---|---|
| Radiologist's interpretation of CTU* | Visible tumour | 10 (42) |
| Number of tumours** | Solitary | 16 (67) |
| Size of tumour** | ≤ 10 mm | 12 (50) |
| | 11–30 mm | 11 (46) |
| | ≥ 31 mm | 1 (4) |
| Tumour stage and grade*** | cTaG1–2 | 17 (71) |
| | cTaG3, Tis, T1 | 5 (21) |
| | cT2+ | 2 (8) |
| TNM | N+ | 0 |
| | M1 | 0 |

Figures represent number of patients (% of the column) unless otherwise indicated.

AI: artificial intelligence; CTU: computed tomography urography; TNM: tumour, node, metastasis.

*In the initial radiological report of CTU.

**Based on reports after transurethral resection of tumour in bladder (TURBT).

***Based on reports after TURBT and second-look resection if indicated.

Figure 3. False negative cases with cT2+ tumours shown here in coronal and axial reconstruction in the corticomedullary phase of CTU.

(a) A flat (sessile) 2 cm T2G3 tumour with squamous cell differentiation in the fundus of the urinary bladder.

(b) A small 1 cm T2G3 tumour with squamous cell differentiation in the neck of the urinary bladder.

CTU: computed tomography urography; TNM: tumour, node, metastasis.

Discussion

In this study, we developed and validated a novel CNN-based automatic image analysis model for the detection of UBC in patients with macroscopic hematuria. For all patients included in the study, we found a high sensitivity of 0.83 (95% CI, 0.76–0.89) and an NPV of 0.97 (95% CI 0.95–0.98) indicating that CNN-based image analysis could be reliable in identifying patients with UBC in CTU. In particular subgroups, such as female patients or patients with high-grade UBC, the model achieved a remarkably high NPV of 0.99. In the future, this could enable the development of distinct assessment algorithms tailored to specific patient subgroups by categorising CTU scans accordingly.

Interestingly, in nine cases our AI model detected UBC that the initial radiological CTU report did not, so in these cases the model outperformed the initial human reading. This extends the findings reported by Gordon et al. [17], who developed a computerised segmentation tool for the inner and outer bladder walls as part of an image analysis for CTU. Whereas they showed that their CNN-based tool segmented both the inner and outer bladder walls accurately, our model identified the urinary bladder and detected UBC within it in about 83% of UBC cases.
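The count of nine can be checked against Tables 1 and 3 by building the 2×2 table of AI result against the initial CTU report among the 142 patients with UBC. The sketch below is plain arithmetic on the published counts; the function and its argument names are illustrative only. The same table shows the converse discordance: 10 tumours that were visible in the initial report were missed by the AI model.

```python
def discordance_table(n_cases: int, report_positive: int,
                      ai_false_negatives: int, ai_fn_report_positive: int) -> dict:
    """2x2 table of AI result versus initial CTU report among confirmed UBC cases."""
    report_negative = n_cases - report_positive
    ai_fn_report_negative = ai_false_negatives - ai_fn_report_positive
    table = {
        ("AI+", "report+"): report_positive - ai_fn_report_positive,
        ("AI+", "report-"): report_negative - ai_fn_report_negative,
        ("AI-", "report+"): ai_fn_report_positive,
        ("AI-", "report-"): ai_fn_report_negative,
    }
    if any(count < 0 for count in table.values()):
        raise ValueError("inconsistent counts")
    return table


# Counts from Table 1 (142 UBC patients, 119 visible in the initial report)
# and Table 3 (24 AI false negatives, 10 of them visible in the initial report).
table = discordance_table(142, 119, 24, 10)

if __name__ == "__main__":
    for cell, count in table.items():
        print(cell, count)
```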

In our study, the AI analysis missed UBC in 24 patients. These false negative cases were difficult to see (Figure 3), probably because many were small (half measured ≤10 mm in two dimensions during TURBT) and most were solitary. Furthermore, 71% were low-grade UBC, and only two patients had small cT2+ tumours. Although cystoscopy therefore cannot be omitted on the basis of the AI model alone, these cystoscopies could probably be scheduled with lower priority.

In the two false negative cases with cT2+ tumours, assessment of the CTUs posed particular challenges. In the female patient, a small flat tumour was situated apically within a poorly distended urinary bladder, with small intestine nearby, which made assessment more difficult. In the male patient, assessment was complicated by the small size of the tumour, its location in the bladder neck, and the mixed attenuation of the prostate. In both patients, the UBC showed substantial squamous cell differentiation, which warrants further investigation of whether tumours with squamous cell differentiation have distinct visual characteristics on CTU.

More generally, a large meta-analysis found the diagnostic accuracy of AI to be equivalent to that of healthcare professionals in detecting diseases from medical imaging [18]. CTU has already shown promise as a non-invasive method for accurately identifying UBC [6], and AI-assisted detection and diagnostic tools are being developed to evaluate CTU scans with sufficient precision. Such tools could complement the work of radiologists, reduce the time needed for CTU evaluation, improve the quality of care for patients presenting with macroscopic hematuria, and potentially conserve healthcare resources. It is essential to emphasise that CTU serves a broader purpose than assessing the urinary bladder alone: radiologists benefit substantially not only from its ability to detect UBC but also from its ability to identify UTUC and various other abnormalities. Additional research is therefore warranted to develop AI models for these purposes. Conversely, the hope is that AI can efficiently identify normal cases, allowing radiologists to concentrate on regions flagged as pathological by the AI.

Other studies have focussed on improving cystoscopic diagnosis of UBC using cystoscopic images [19–21], identifying complete response of UBC to neoadjuvant chemotherapy with an AI-based CT computerised decision-support system [22], or assessing UBC stage with a CNN model [23]; however, no previous studies have used AI-based assessment of CTU primarily to detect UBC. This is supported by a comprehensive review by Borhani et al. [10] of AI in UBC detection and outcome prediction, which revealed a scarcity of studies assessing AI in CTU for UBC detection.

However, using AI in medical applications, especially in radiology, presents several challenges and concerns. AI models in radiology require large amounts of high-quality training data, and obtaining large, well-annotated datasets is often difficult. In addition, integrating AI systems into existing healthcare infrastructure and ensuring compatibility with various medical devices and systems can be complex, which may hinder the seamless adoption of AI in radiology. Despite these challenges, AI has the potential to benefit radiology and healthcare in general greatly by improving diagnostic accuracy, reducing workload, and enhancing patient care. Another unresolved issue is accountability: whom can a patient hold accountable for a cancer diagnosis missed by an AI model? Should they seek redress from the AI company, healthcare providers, radiologists, or urologists? This ethical dilemma underscores the need for in-depth discussions between patient organisations, physicians, and healthcare providers.

Computed tomography urography has previously been proposed as a reliable method for detecting UBC. In a study by Helenius et al. [5] of 435 patients, 55 of whom were diagnosed with UBC, CTU identified UBC in 48 patients, with a sensitivity of 0.87 and an NPV of 0.98. In our study, we achieved comparable results, with a sensitivity of 0.83 and an NPV of 0.97. However, whereas that study focussed on the overall effectiveness of CTU in detecting UBC, our study explored whether a specialised AI model could be used for this purpose. Similar performance was indeed achieved, although with a lower specificity.

Our study has several limitations. Firstly, the AI model was validated exclusively with data from a single centre, which raises the risk of overfitting. However, the study spanned a considerable time period, allowing us to assess a substantial number of diverse CTU scans. Secondly, CTUs of low quality were excluded, limiting the general applicability of the model. This was done because the study was designed to evaluate the feasibility of using CNN-based models to detect UBC; further development will be necessary to increase the range of scans that can be evaluated. Lastly, the number of controls was limited, which likely contributed to the lower specificity. Nevertheless, the high sensitivity and NPV imply that a negative result from the AI model makes it highly probable that the patient truly does not have UBC, which was the goal of our study. Further training with larger datasets of normal cases should also improve specificity. Improving the AI model and optimising CTU, for example through better bladder distension, are crucial for streamlining the investigation of macroscopic hematuria.

Conclusion

We developed and tested a novel CNN-based automatic image analysis AI model to help radiologists in the initial evaluation of CTU in patients with macroscopic hematuria, in order to detect and exclude UBC efficiently. The model showed promising results, with a high detection rate and a high NPV. Further development could reduce the need for invasive investigations and help prioritise patients with serious tumours. Nevertheless, AI-based software for real-time image-guided decisions still needs the necessary regulatory approvals and evaluation in large, multi-centre, prospective studies with external validation before it can be applied in daily practice.

Acknowledgements

The authors thank Ms. Lena Knutsson for her technical support.

Ethical approval

The study was approved by the Swedish Ethical Review Authority (File No. 2020-01127), with an approved amendment (No. 2022-05590-02) for adding more participants. The study was performed in accordance with the Declaration of Helsinki.

Data availability

The data used to support the findings of this study are available from the corresponding author upon reasonable request.

References

- [1] IARC. Cancer Today. Estimated number of new cases in 2020, worldwide, both sexes, all ages. 2021 [cited 1st June 2021]. Available from: https://gco.iarc.fr/today/online-analysis-table

- [2] Bosetti C, Bertuccio P, Chatenoud L, et al. Trends in mortality from urologic cancers in Europe, 1970–2008. Eur Urol. 2011;60(1):1–15. https://doi.org/10.1016/j.eururo.2011.03.047

- [3] Trinh TW, Glazer DI, Sadow CA, et al. Bladder cancer diagnosis with CT urography: test characteristics and reasons for false-positive and false-negative results. Abdom Radiol (NY). 2018;43(3):663–671. https://doi.org/10.1007/s00261-017-1249-6

- [4] Van Der Molen AJ, Cowan NC, Mueller-Lisse UG, et al. CT urography: definition, indications and techniques. A guideline for clinical practice. Eur Radiol. 2008;18(1):4–17. https://doi.org/10.1007/s00330-007-0792-x

- [5] Helenius M, Brekkan E, Dahlman P, et al. Bladder cancer detection in patients with gross haematuria: computed tomography urography with enhancement-triggered scan versus flexible cystoscopy. Scand J Urol. 2015;49(5):377–381. https://doi.org/10.3109/21681805.2015.1026937

- [6] Abuhasanein S, Hansen C, Vojinovic D, et al. Computed tomography urography with corticomedullary phase can exclude urinary bladder cancer with high accuracy. BMC Urol. 2022;22(1):60. https://doi.org/10.1186/s12894-022-01009-4

- [7] Cha KH, Hadjiiski L, Samala RK, et al. Urinary bladder segmentation in CT urography using deep-learning convolutional neural network and level sets. Med Phys. 2016;43(4):1882. https://doi.org/10.1118/1.4944498

- [8] Drouin SJ, Yates DR, Hupertan V, et al. A systematic review of the tools available for predicting survival and managing patients with urothelial carcinomas of the bladder and of the upper tract in a curative setting. World J Urol. 2013;31(1):109–116. https://doi.org/10.1007/s00345-012-1008-9

- [9] Suarez-Ibarrola R, Hein S, Reis G, et al. Current and future applications of machine and deep learning in urology: a review of the literature on urolithiasis, renal cell carcinoma, and bladder and prostate cancer. World J Urol. 2020;38(10):2329–2347. https://doi.org/10.1007/s00345-019-03000-5

- [10] Borhani S, Borhani R, Kajdacsy-Balla A. Artificial intelligence: A promising frontier in bladder cancer diagnosis and outcome prediction. Crit Rev Oncol Hematol. 2022;171:103601. https://doi.org/10.1016/j.critrevonc.2022.103601

- [11] Mongan J, Moy L, Kahn CE Jr. Checklist for artificial intelligence in medical imaging (CLAIM): a guide for authors and reviewers. Radiol Artif Intell. 2020;2(2):e200029. https://doi.org/10.1148/ryai.2020200029

- [12] Brierley J, Gospodarowicz M, Wittekind C. TNM classification of malignant tumours. 8th ed. John Wiley and Sons, Hoboken, New Jersey, USA; 2017.

- [13] Busch C, Algaba F. The WHO/ISUP 1998 and WHO 1999 systems for malignancy grading of bladder cancer. Scientific foundation and translation to one another and previous systems. Virchows Arch. 2002;441(2):105–108. https://doi.org/10.1007/s00428-002-0633-x

- [14] Trägårdh E, Borrelli P, Kaboteh R, et al. RECOMIA-a cloud-based platform for artificial intelligence research in nuclear medicine and radiology. EJNMMI Phys. 2020;7(1):51. https://doi.org/10.1186/s40658-020-00316-9

- [15] Çiçek Ö, Abdulkadir A, Lienkamp S, et al. 3D U-Net: learning dense volumetric segmentation from sparse annotation. In: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. Cham: Springer International Publishing; 2016.

- [16] Edenbrandt L, Enqvist O, Larsson M, Ulén J. Organ Finder – a new AI-based organ segmentation tool for CT. medRxiv. New York, United States; 2022.

- [17] Gordon MN, Hadjiiski LM, Cha KH, et al. Deep-learning convolutional neural network: inner and outer bladder wall segmentation in CT urography. Med Phys. 2019;46(2):634–648. https://doi.org/10.1002/mp.13326

- [18] Liu X, Faes L, Kale AU, et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health. 2019;1(6):e271–e297. https://doi.org/10.1016/S2589-7500(19)30123-2

- [19] Ikeda A, Nosato H, Kochi Y, et al. Support system of cystoscopic diagnosis for bladder cancer based on artificial intelligence. J Endourol. 2020;34(3):352–358. https://doi.org/10.1089/end.2019.0509

- [20] Lorencin I, Anđelić N, Španjol J, Car Z. Using multi-layer perceptron with Laplacian edge detector for bladder cancer diagnosis. Artif Intell Med. 2020;102:101746. https://doi.org/10.1016/j.artmed.2019.101746

- [21] Eminaga O, Eminaga N, Semjonow A, Breil B. Diagnostic classification of cystoscopic images using deep convolutional neural networks. JCO Clin Cancer Inform. 2018;2:1–8. https://doi.org/10.1200/CCI.17.00126

- [22] Cha KH, Hadjiiski LM, Cohan RH, et al. Diagnostic accuracy of CT for prediction of bladder cancer treatment response with and without computerized decision support. Acad Radiol. 2019;26(9):1137–1145. https://doi.org/10.1016/j.acra.2018.10.010

- [23] Garapati SS, Hadjiiski L, Cha KH, et al. Urinary bladder cancer staging in CT urography using machine learning. Med Phys. 2017;44(11):5814–5823. https://doi.org/10.1002/mp.12510