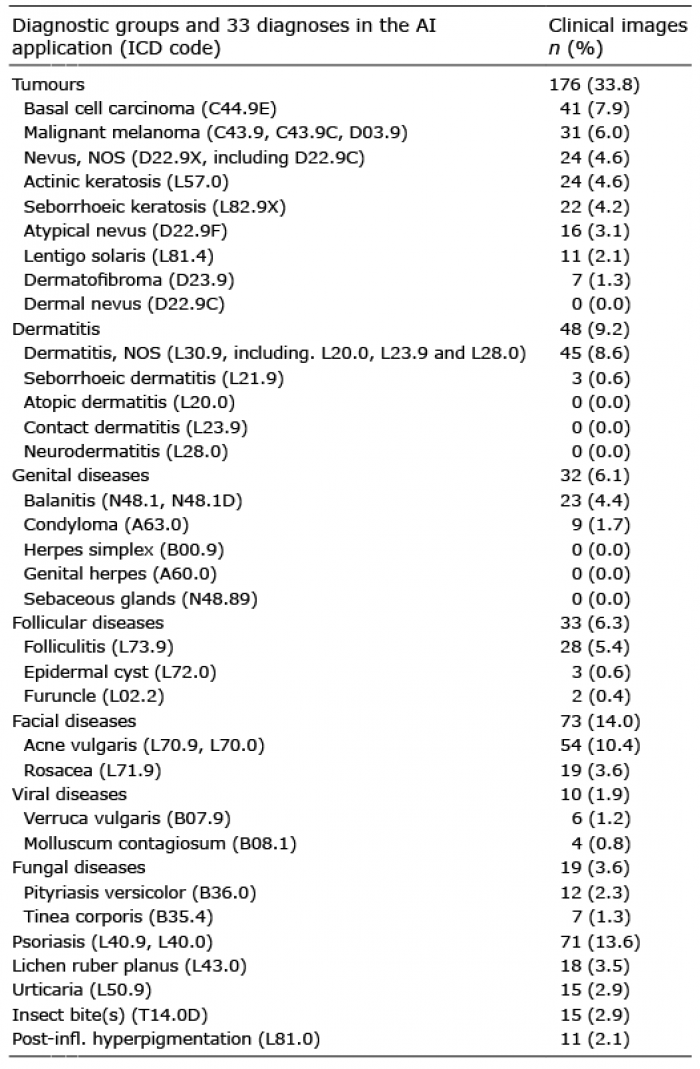

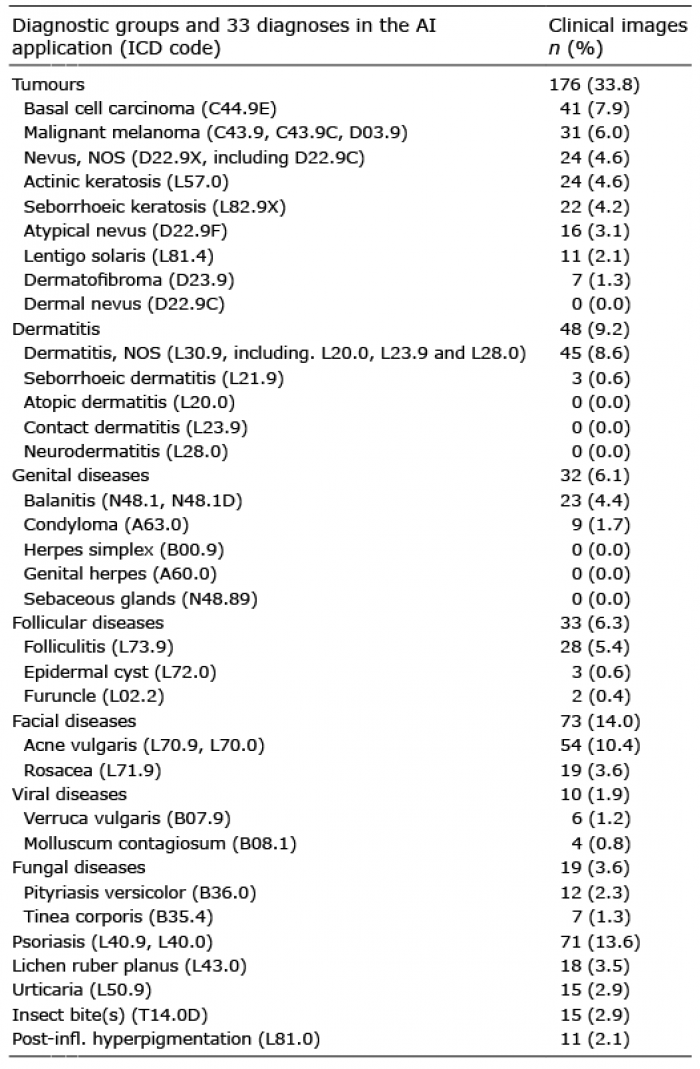

Table I. The included diagnoses. Diagnostic groups and the 33 diagnoses in the Skin Image Search™ application with number of clinical cases in each category

1Department of Dermatology and Venereology, Institute of Clinical Sciences, Sahlgrenska Academy, University of Gothenburg, 2Region Västra Götaland, Sahlgrenska University Hospital, Department of Dermatology and Venereology, Gothenburg, 3Division of Dermatology and Venereology, Department of Clinical Sciences, Lund University, Skåne University Hospital, Lund, 4Department of Dermatology, Karlskoga Hospital, Karlskoga, Sweden, 5Department of Dermatology, Juaneda General Hospital, Menorca, Spain, 6Teladoc Health, Inc., New York, USA, and 7Departments of Pathology and Dermatology, Institutes of Biomedicine and Clinical Sciences, Sahlgrenska Academy, University of Gothenburg, Gothenburg, Sweden

Artificial intelligence (AI) algorithms for automated classification of skin diseases are available to the consumer market. Studies of their diagnostic accuracy are rare. We assessed the diagnostic accuracy of an open-access AI application (Skin Image Search™) for recognition of skin diseases. Clinical images including tumours, infective and inflammatory skin diseases were collected at the Department of Dermatology at the Sahlgrenska University Hospital and uploaded for classification by the online application. The AI algorithm classified the images giving 5 differential diagnoses, which were then compared to the diagnoses made clinically by the dermatologists and/or histologically. We included 521 images portraying 26 diagnoses. The diagnostic accuracy was 56.4% for the top 5 suggested diagnoses and 22.8% when only considering the most probable diagnosis. The level of diagnostic accuracy varied considerably for diagnostic groups. The online application demonstrated low diagnostic accuracy compared to a dermatologist evaluation and needs further development.

Key words: artificial intelligence; online diagnostics; dermatology; skin disease.

Accepted Aug 21, 2020; Epub ahead of print Aug 27, 2020

Acta Derm Venereol 2020; 100: adv00260.

doi: 10.2340/00015555-3624

Corr: Oscar Zaar, MD, PhD, Department of Dermatology and Venereology, Sahlgrenska University Hospital, Gröna stråket 16, SE-413 45 Gothenburg, Sweden. E-mail: oscar.zaar@vgregion.se

In research settings, artificial intelligence algorithms for automated classification of skin diseases have shown promising results, with a diagnostic accuracy equal to or even exceeding that of dermatologists. Similar online artificial intelligence applications are available to the consumer market, readily accessible to anyone with a smartphone. Nevertheless, external and independent validation investigations of the diagnostic accuracy of these applications are lacking. The studied artificial intelligence application achieved an unsatisfactory overall diagnostic accuracy. The level of diagnostic accuracy varied greatly for diagnostic groups as well as for individual diagnoses. Online automated classification systems should be further developed to ensure appropriate accuracy.

Skin diseases are common and one of the leading causes of disability worldwide (1). Population-based studies show a similar overall prevalence of skin conditions of approximately 22–35%, and as many as 12–15% of visits to primary care in industrialized countries relate to skin problems (2–4). However, access to dermatologists is limited. Artificial intelligence (AI) is an emerging technology with the promise of assisting clinicians in making correct healthcare decisions faster and more reliably (5–9). AI based on convolutional neural networks (CNNs) has shown diagnostic accuracy equal or even superior to that of dermatologists (8, 10–13).

With the increasing development of healthcare applications for smartphones – over 165,000 in 2017 and an increasing number of dermatology-associated smartphone applications (14, 15) – there is a risk that consumers rely on some of these to get answers to their dermatological concerns (16). A recent review of the medical smartphone applications for the assessment of melanoma showed a pooled specificity of 84% and sensitivity of 73% (14). However, various studies evaluating smartphone applications for skin cancer detection have shown poor performance (17–23). While smartphone applications can potentially be informative for the general public, the results of using such systems may generate concern or provide a false sense of reassurance, perhaps leading to missed or delayed diagnosis. Thus, reliable technology and correct information are fundamental. Studies on smartphone applications with a more generalized dermatological assessment covering both skin cancer and inflammatory dermatoses including genital diseases are lacking.

The Skin Image Search™ (SIS) application (iDoc24, CA, USA, available from https://www.firstderm.com/ai-dermatology/) is an AI algorithm developed by training a CNN with a dataset of approximately 58,000 smartphone images obtained from consumers. The application includes 33 common skin diseases (Table I). The program is free, available online, and can be accessed with a web browser (24). The user uploads an image showing a skin condition, and the online service searches its dataset for matching images and provides a list of the 5 most likely differential diagnoses, in descending order of likelihood from 1 to 5, hereafter referred to as the “top 5”. According to the information provided by the company, the AI algorithm has shown 40% diagnostic accuracy in ranking the correct diagnosis as the most likely diagnosis. The accuracy in the top 5, i.e. the presence of the true diagnosis among the 5 proposed differential diagnoses, is claimed to be 80% (25). Nevertheless, independent and systematic validation of the application has not been performed.

The aim of the study was to analyse the diagnostic accuracy of the SIS application.

This study was designed as an observational study carried out at the Department of Dermatology and Venereology at Sahlgrenska University Hospital in Gothenburg from April 2018 to May 2019. The images included were obtained from patients giving their consent to have their skin conditions photographed. Clinical photos were taken by professional health care workers using smartphone cameras (iPhone 8 plus, Apple Inc., CA, USA). Dermoscopy images were not included.

Data were collected by consecutive sampling: images were collected until the desired sample size of at least 20 images per diagnosis for the 33 diagnoses available in the SIS application and/or a total of 500 images was reached. The diagnosis had to be confirmed clinically by a dermatologist and/or by histopathological examination. All images with an ambiguous diagnosis were excluded. All images were anonymous: they contained no personal data and the patient could not be recognized from the image. When necessary, this was achieved by cropping the images in Microsoft Paint (Microsoft Corporation, WA, USA). Images of poor quality or images portraying different kinds of solitary lesions (e.g. nevus near a seborrhoeic keratosis) were excluded or, if possible, cropped so that the confirmed diagnosis became the main visible lesion in the image. Non-assessable images due to low image quality (e.g. bad lighting or blurry focus) or unfit image composition (e.g. camera not aimed at the lesion, image taken from an inadequate distance or angle) were excluded. Images showing a ruler or pen marking (e.g. circles or arrows) were excluded, as were images in which a bandage or sticking plaster partially or completely covered the lesion in question. Images showing lesions undergoing some kind of surgical intervention (e.g. shaving, excision or biopsy), or bleeding following such treatment, were also excluded.

The following additional information was collected from the patient charts: patient ID, age, gender, skin type, image type (overview or macro), body site and final diagnosis (defined as the clinical diagnosis or, when available, the histopathological diagnosis).

Collected images were uploaded for automated classification by the SIS application using a demonstration version that used the same algorithm as the online version. The demonstration version was provided by the developer and was more flexible compared to the regular version available online, allowing the classification process to be made by uploading a single image, instead of two images used in the online version. However, according to the developer, the online version analyses only one selected image and thus there was assumed to be no difference in the accuracy between the demonstration version and the online version.

The top 5 differential diagnoses provided by the SIS application were imported into Microsoft Excel (Microsoft Corporation, WA, USA). Each image’s confirmed diagnosis was matched against the top 5, and each classification was given a score from 1 to 5 according to the position of the confirmed diagnosis in the list. If the confirmed diagnosis was absent from the top 5, the classification was given a score of 0 and marked as incorrectly diagnosed. If multiple differential diagnoses were correct for the same image, the best score was chosen. The overall diagnostic accuracy was analysed, as well as the diagnostic accuracy for separate diagnoses and for diagnostic groups with a common aetiology (e.g. tumours, viral diseases and fungal diseases) or body site (e.g. genital diseases and facial dermatoses).
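The scoring rule described above can be illustrated with a minimal Python sketch. It is not the authors' workflow (they scored the results in Microsoft Excel); the function names `rank_score` and `top_k_accuracy` and the example diagnosis lists are hypothetical, and matching is assumed to be a simple case-insensitive string comparison.

```python
from typing import Sequence


def rank_score(confirmed: Sequence[str], top5: Sequence[str]) -> int:
    """Return 1-5 for the best-ranked confirmed diagnosis, or 0 if none is listed.

    `confirmed` may hold several acceptable diagnoses for one image; the
    first match found while walking down the list is the best score.
    """
    accepted = {d.strip().lower() for d in confirmed}
    for position, suggestion in enumerate(top5[:5], start=1):
        if suggestion.strip().lower() in accepted:
            return position
    return 0  # confirmed diagnosis absent from the top 5


def top_k_accuracy(scores: Sequence[int], k: int) -> float:
    """Share of images whose score lies between 1 and k (inclusive)."""
    if not scores:
        raise ValueError("at least one score is required")
    hits = sum(1 for s in scores if 1 <= s <= k)
    return hits / len(scores)


# Hypothetical suggestion list for one image (not taken from the study data).
suggestions = ["rosacea", "acne", "psoriasis", "dermatitis", "nevus"]
scores = [
    rank_score(["acne"], suggestions),           # found at position 2
    rank_score(["lichen planus"], suggestions),  # not in the list -> 0
    rank_score(["nevus", "rosacea"], suggestions),  # two correct, best is 1
]
print(scores, top_k_accuracy(scores, 1), top_k_accuracy(scores, 5))
```

With scores defined this way, the accuracy "for the most probable diagnosis" corresponds to `k = 1` and the "top 5" accuracy to `k = 5`.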

All data were analysed using R version 3.0.3 (The R Foundation for Statistical Computing, Vienna, Austria). Fisher’s exact test was applied in the analysis of contingency tables. Ethical approval was granted by the ethical review board of Linköping (approval number: 2019-02186).
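For readers unfamiliar with Fisher’s exact test, the following is a minimal pure-Python sketch of the two-sided test for a 2×2 contingency table. The study itself used R; the function name `fisher_exact_two_sided` is hypothetical, and the choice of comparison in the example (dermatitis vs viral diseases, using the top-5 counts reported in the Results) is an illustration only, not necessarily a comparison the authors performed.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    The p-value is the total hypergeometric probability of all tables with
    the same margins that are no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def weight(x: int) -> int:
        # Integer numerator of the hypergeometric probability of cell a == x.
        return comb(row1, x) * comb(row2, col1 - x)

    observed = weight(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    extreme = sum(
        weight(x) for x in range(lowest, highest + 1) if weight(x) <= observed
    )
    return extreme / total


# Illustrative table: rows are diagnostic groups, columns are
# (correct diagnosis in top 5, correct diagnosis not in top 5).
p_value = fisher_exact_two_sided(44, 4, 4, 6)
print(f"p = {p_value:.4g}")
```

Working with integer weights keeps the comparison against the observed table exact; statistical packages typically compare floating-point probabilities with a small tolerance instead.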

A total of 891 clinical images were collected. Of these, 241 images were excluded according to the exclusion criteria above and 129 images were dismissed as duplicates, resulting in a final number of 521 images obtained from 215 patients. Often several images were included from the same patient. The median age for the population (131 males [60.9%] and 84 females [39.1%]) was 53.4 years (range 1–94 years) with the three most common body sites being trunk, face/neck, and lower extremities, accounting for 23.0%, 21.5%, and 16.9%, respectively. The skin phototypes were the following: type I 16.7%, II 59.5%, III 17.2%, IV 4.2%, V 0.9%, VI 1.4%. The patients’ diagnoses were confirmed by histopathological examination in 52.6% (113/215) of the cases and in the remaining 47.4% (102/215) cases the diagnoses were made clinically by dermatologists.

In the combined results of all 521 images uploaded for classification, the AI algorithm was able to classify the correct diagnosis among the top 5 in 56.4% (294/521) of the images and failed to give the correct diagnosis in 43.6% (227/521) of the images. The SIS application gave the true diagnosis ranked as the most likely in 22.8% (119/521) of the images (Fig. 1).

Fig. 1. The overall diagnostic accuracy of the Skin Image Search™ application. The scores 0–5 are colour-coded by different shades of blue with darker shades portraying more accurate scores. The artificial intelligence application was able to place the correct diagnosis among the top 5 in 56.4% (294/521) of the images and failed to give the correct diagnosis in 43.6% (227/521) of the images. CI: confidence interval.

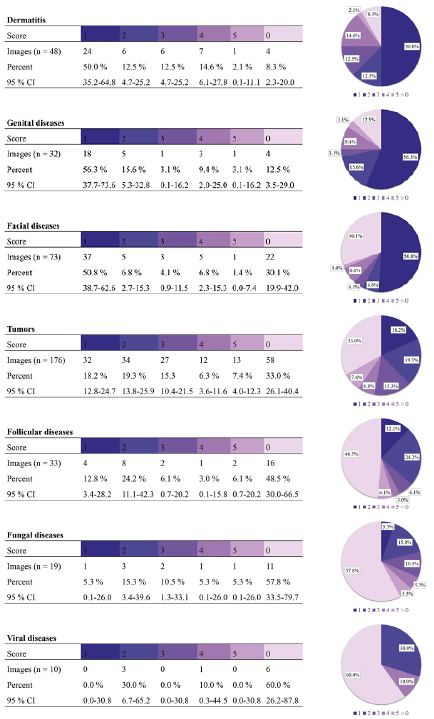
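The reported proportions can be reproduced from the counts, and a confidence interval can be attached to them. The sketch below uses the Wilson score interval; this is an assumption for illustration, since the text does not state which interval method was used for the figure, and the function name `wilson_interval` is hypothetical.

```python
from math import sqrt


def wilson_interval(successes: int, n: int, z: float = 1.959964) -> tuple:
    """Wilson score interval for a binomial proportion (default z gives ~95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half_width, centre + half_width


# Counts reported above: correct diagnosis in the top 5, and ranked first.
for label, hits in (("top 5", 294), ("top 1", 119)):
    low, high = wilson_interval(hits, 521)
    print(f"{label}: {100 * hits / 521:.1f}% (95% CI {100 * low:.1f}-{100 * high:.1f}%)")
```

Other interval methods (e.g. exact Clopper-Pearson) would give slightly different bounds for the same counts.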

When focusing only on diagnostic groups containing several diagnoses, the SIS application had most success in accurately classifying (i.e. the correct diagnosis present in the top 5) dermatitis (91.7%, 44/48) and genital diseases (87.5%, 28/32), followed by facial diseases (68.9%, 51/73) and tumours (67.0%, 118/176). The least successful in diagnostic accuracy were viral diseases (40.0%, 4/10), fungal diseases (42.1%, 8/19) and follicular diseases (48.5%, 17/33) (Fig. 2).

Fig. 2. Diagnostic accuracy for the diagnostic groups.

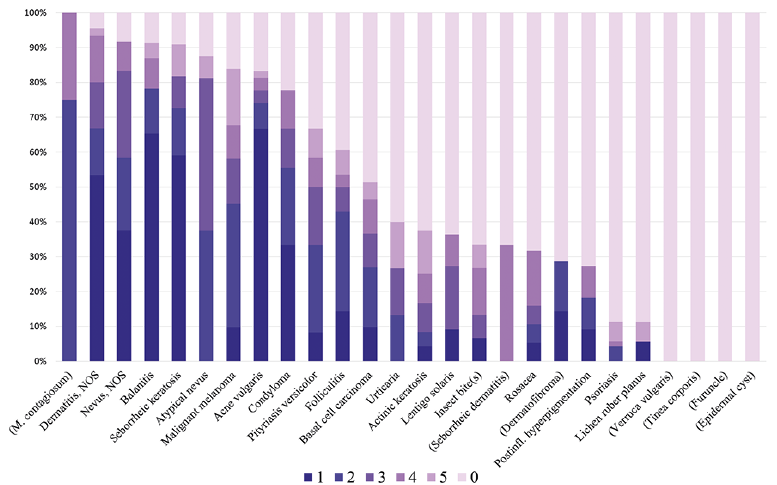

For individual diagnoses, it was easiest for the system to detect dermatitis, tumours (e.g. nevus, atypical nevus, malignant melanoma and seborrhoeic keratosis), common genital diseases (e.g. balanitis and condyloma) and the facial disease acne, all having a diagnostic accuracy of ≥ 75% in the top 5 (Fig. 3). Acne had the highest frequency of number 1 scores (66.7%, 36/54) followed by balanitis (65.2%, 15/23) and seborrhoeic keratosis (59.1%, 13/22). Diagnostic accuracy was poorest for psoriasis (11.3%, 8/71), lichen planus (11.1%, 2/18) and post-inflammatory hyperpigmentation (27.3%, 3/11). Interestingly, melanoma also had a relatively low frequency of number 1 scores (9.7%, 3/31).

Fig. 3. Diagnostic accuracy for individual diagnoses. The bars are encoded with different colours depending on the given scores (0–5). Diagnoses with <10 images uploaded for classification are presented in parentheses.

A total of 521 images were collected and classified by the online open-access AI application. The results show an overall diagnostic accuracy of 56.4% in the top 5, and 22.8% accuracy for the most probable diagnosis (i.e. score=1). This would probably be interpreted as a poor diagnostic performance if translated into use in clinical practice as a diagnostic aid.

Several studies have shown poor agreement between dermatologists and online smartphone applications, with low sensitivity and specificity (18, 21–23). Our results broaden the research field by assessing not only skin cancer detection with smartphone applications but also inflammatory and genital diseases.

Computer vision has successfully been used in other medical fields including radiology (26). In a recent survey 77.3% of dermatologists agreed that AI will improve dermatology (27). However, there are still challenges in the development of AI in dermatology. In contrast to radiology, where all the images are taken with professional equipment, photographs used in dermatological diagnostics vary in quality and can be taken by both health care professionals and patients themselves. The variation in the quality of the images can cause problems when training and testing the AI algorithms.

The majority of patients with skin conditions are handled and treated by primary care physicians. Since access to specialized care is limited, only the minority of patients visit a dermatologist. The images collected in this study were all gathered from the Department of Dermatology and Venereology at Sahlgrenska University Hospital, and it is questionable whether these images are representative of the images taken by the general public which were used to develop the SIS application. One could expect that the clinical images used in this study portrayed more advanced or rare variations of skin diseases. Such imparity could explain why some diagnoses had very low diagnostic accuracy. An example could be psoriasis, for which the AI app achieved very low accuracy with only 11.3% (8/71) of the images in the top 5. Further, it has been observed in earlier studies that diagnostic accuracy tends to drop when tested on an independent dataset (28).

Clinical photos, including images taken by smartphones, can vary significantly in terms of image factors such as distance to object, angle, quality and light, making the classification process considerably more challenging (16, 21, 26). Esteva et al. (10) overcame this challenge to some extent by demonstrating a new approach in CNN for melanoma classification based on clinical images without restrictions to man-made criteria (e.g. asymmetry, border, colour and size) that matched the diagnostic performance of dermatologists. Without the restriction of specialized equipment, such systems could potentially provide widespread automated diagnostic aid for all medical practitioners and would also be available for anyone with access to a smartphone (14). However, further development is needed before these applications can be used by consumers.

Strengths of our study include a considerably larger number of clinical images than in similar studies on smartphone applications (17–21). Images were selected by consecutive sampling with predefined inclusion and exclusion criteria. Histopathological verification of the diagnoses was available in more than half of the patients. In the remaining cases, the diagnoses were made by specialists working in a university hospital. However, a limitation was that we did not use a standardized consensus opinion including several dermatologists for the diagnoses that were not histologically verified. Unfortunately, not all 33 diagnoses in the AI application were represented and 8 diagnoses were represented by fewer than 10 images, making it difficult to draw any conclusions for these individual diagnoses. The images were not evenly distributed among the individual diagnoses, which could bias the overall accuracy towards that of the diagnoses with more images. As many as 241 images were excluded, which may illustrate the presence of a large percentage of clinical images of poor quality and inadequate image composition. A further limitation was that all the images were collected in the same department.
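The effect of an uneven image distribution on the overall accuracy can be made concrete with a small sketch comparing the pooled accuracy (all images weighted equally) with the unweighted mean of the per-diagnosis accuracies. The counts below are invented purely for illustration and are not study data; the function names are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical (correct, total) counts per diagnosis; NOT taken from the study.
toy_counts: Dict[str, Tuple[int, int]] = {
    "diagnosis A": (90, 100),  # many images, high accuracy
    "diagnosis B": (2, 10),    # few images, low accuracy
}


def pooled_accuracy(counts: Dict[str, Tuple[int, int]]) -> float:
    """Accuracy over all images; diagnoses with more images weigh more."""
    correct = sum(c for c, _ in counts.values())
    total = sum(t for _, t in counts.values())
    return correct / total


def mean_per_diagnosis_accuracy(counts: Dict[str, Tuple[int, int]]) -> float:
    """Unweighted mean of per-diagnosis accuracies; each diagnosis weighs equally."""
    return sum(c / t for c, t in counts.values()) / len(counts)


print(f"pooled: {pooled_accuracy(toy_counts):.2f}")
print(f"mean per diagnosis: {mean_per_diagnosis_accuracy(toy_counts):.2f}")
```

In this toy case the pooled figure (about 0.84) sits close to the accuracy of the well-represented diagnosis, whereas the per-diagnosis mean (0.55) treats both diagnoses equally; reporting both is one way to show how much the case mix drives an overall figure.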

Future studies are warranted in order to evaluate the studied application more accurately, above all with enough data to represent all diagnoses. It is important to conduct independent studies of diagnostic accuracy for online classification systems within dermatology and venereology, since they are available to anyone in the general population and could have an impact on users’ health. Further, because the development of such systems will be continuous, studies have to be repeated and updated to keep up with the current quality of such services.

In conclusion, the AI application in this study achieved an unsatisfactory level of overall diagnostic accuracy. The level of diagnostic accuracy varied greatly for diagnostic groups as well as for individual diagnoses. Our study examined one of the online automated classification systems available to consumers, and users of this application are advised to approach this technology with curiosity about the developing field and with an understanding that this system does not replace the need for clinical examination by clinicians.

Conflict of interest: OZ, KS, AO, AS and MAT previously worked as consultants for iDoc24, the company developing Skin Image Search™.